
Leveraging Demonstration Learning and Invariant Representations for Modular Assembly and Cable Manipulation

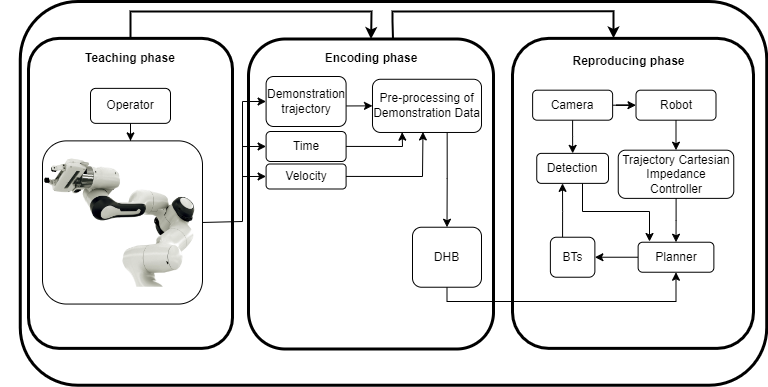

This master’s thesis develops an autonomous robotic system capable of manipulating both rigid and deformable objects. The approach combines kinesthetic demonstration, invariant trajectory representations based on Denavit–Hartenberg-inspired bidirectional (DHB) invariants, and vision-based perception to insert Ethernet cables and interact with modular components under real-world constraints.

Status: Completed

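Invariant Representation (DHB): encodes motion with Denavit–Hartenberg-inspired bidirectional (DHB) invariants. The mapping works in both directions (a trajectory can be converted into invariants and reconstructed from them), so a demonstrated motion can be reproduced robustly across spatial configurations.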
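To make the invariance idea concrete, here is a minimal NumPy sketch of DHB-style position invariants. It is a simplified stand-in, not the thesis's DHB library: for each step it computes a displacement length, a bending angle between consecutive displacements, and a signed twist of the bending axis, in the spirit of the position part of the original DHB formulation (whose frame conventions may differ, and whose orientation invariants are omitted here). The names `dhb_style_invariants` and `rotation_matrix` and the helix test trajectory are hypothetical. Because every quantity is built from lengths, dot products, and cross products, the result does not change when the whole trajectory is rotated and translated.

```python
import numpy as np


def dhb_style_invariants(positions, eps=1e-9):
    """Simplified DHB-style position invariants (hypothetical sketch).

    Returns three arrays for a trajectory of N positions:
      m      : displacement lengths |p[k+1] - p[k]|              (N-1 values)
      theta1 : bending angle between consecutive displacements    (N-2 values)
      theta2 : signed twist of the bending axis about the shared
               displacement direction                             (N-3 values)
    """
    p = np.asarray(positions, dtype=float)
    v = np.diff(p, axis=0)                       # displacements
    m = np.linalg.norm(v, axis=1)
    x = v / np.maximum(m, eps)[:, None]          # unit directions
    c = np.cross(x[:-1], x[1:])                  # unnormalized bending axes
    cn = np.linalg.norm(c, axis=1)
    theta1 = np.arctan2(cn, np.sum(x[:-1] * x[1:], axis=1))
    b = c / np.maximum(cn, eps)[:, None]         # near zero on straight segments
    theta2 = np.arctan2(
        np.sum(np.cross(b[:-1], b[1:]) * x[1:-1], axis=1),
        np.sum(b[:-1] * b[1:], axis=1),
    )
    return m, theta1, theta2


def rotation_matrix(axis, angle):
    """Proper rotation about an axis (Rodrigues' formula)."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    K = np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


# Hypothetical demonstration: a helix, plus a rotated and translated copy.
t = np.linspace(0.0, 4.0 * np.pi, 60)
demo = np.stack([np.cos(t), np.sin(t), 0.1 * t], axis=1)
R = rotation_matrix([1.0, 2.0, 0.5], 0.8)
moved = demo @ R.T + np.array([0.4, -0.3, 1.2])

m, theta1, theta2 = dhb_style_invariants(demo)
invariant_under_rigid_motion = all(
    np.allclose(u, w)
    for u, w in zip(dhb_style_invariants(demo), dhb_style_invariants(moved))
)
print(invariant_under_rigid_motion)
```

Straight segments make the bending axis undefined; the sketch simply lets it collapse to zero (twist 0), whereas a production implementation would need an explicit rule for that case.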

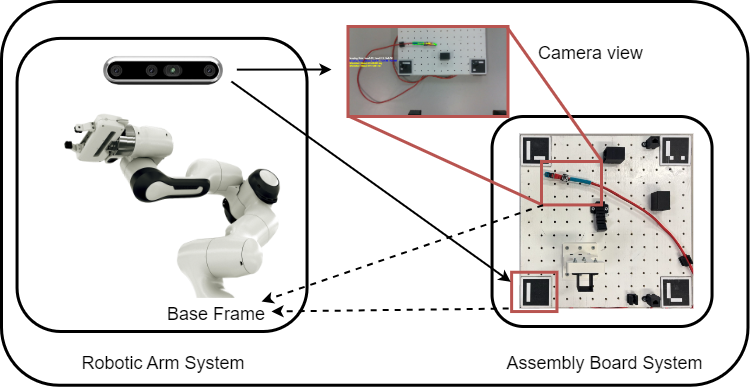

Intel RealSense depth sensing and ArUco markers provide 6D pose estimation and 3D environment awareness.
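After a marker is detected, its pose has to be expressed in the robot's base frame before a trajectory can be generated. OpenCV pose routines such as `cv2.solvePnP` return a pose as an axis-angle rotation vector `rvec` and a translation `tvec`. The sketch below converts that pair into a homogeneous transform with NumPy (mirroring the `cv2.Rodrigues` convention) and chains it with a camera-to-base transform, assumed to come from a separate hand-eye calibration, and a fixed marker-to-object offset. The names `object_pose_in_base` and `T_marker_obj` and all numeric values are hypothetical, not taken from the thesis code.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (same convention as cv2.Rodrigues)."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = np.asarray(t, dtype=float).reshape(3)
    return T


def object_pose_in_base(T_base_cam, rvec, tvec, T_marker_obj):
    """Chain base->camera, camera->marker (from rvec/tvec), marker->object."""
    T_cam_marker = make_transform(rodrigues(rvec), tvec)
    return T_base_cam @ T_cam_marker @ T_marker_obj


# Hypothetical values, chosen for readability rather than realism:
# camera extrinsics as a pure translation, marker at (0.1, 0, 0.5) m in the
# camera frame, target 2 cm from the marker centre along the marker's y axis.
T_base_cam = make_transform(np.eye(3), [0.0, 0.0, 1.0])
T_marker_obj = make_transform(np.eye(3), [0.0, 0.02, 0.0])
T_base_obj = object_pose_in_base(T_base_cam, [0.0, 0.0, 0.0], [0.1, 0.0, 0.5], T_marker_obj)
print(T_base_obj[:3, 3])
```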

A modular task board, inspired by the NIST task-board benchmarks, evaluates how well tasks generalize across reconfigurable layouts.

Kinesthetic teaching for intuitive programming of cable manipulation skills.
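Raw kinesthetic recordings usually contain pauses at the start and end, where consecutive samples barely move and the direction of motion is undefined; representations built from displacement directions are sensitive to this. One common preprocessing step, shown here as an assumption rather than the thesis's documented pipeline, drops near-stationary samples and then resamples the path uniformly by arc length. The function name `resample_by_arc_length`, the `min_step` threshold, and the sample recording are hypothetical.

```python
import numpy as np


def resample_by_arc_length(positions, n_samples, min_step=1e-4):
    """Drop near-stationary samples, then resample uniformly along arc length.

    positions : (N, 3) end-effector positions recorded during kinesthetic teaching
    min_step  : hypothetical threshold (metres) below which a sample counts as a pause
    """
    p = np.asarray(positions, dtype=float)
    kept = [p[0]]
    for q in p[1:]:
        # keep a sample only if it moved far enough from the last kept one
        if np.linalg.norm(q - kept[-1]) >= min_step:
            kept.append(q)
    kept = np.array(kept)
    if len(kept) < 2:
        raise ValueError("demonstration contains no motion above min_step")
    s = np.concatenate([[0.0], np.cumsum(np.linalg.norm(np.diff(kept, axis=0), axis=1))])
    s_new = np.linspace(0.0, s[-1], n_samples)
    # interpolate each coordinate as a function of arc length
    return np.stack([np.interp(s_new, s, kept[:, d]) for d in range(kept.shape[1])], axis=1)


# Hypothetical recording: the arm rests, moves along x, then rests again.
demo = np.array([[0.0, 0.0, 0.0]] * 5
                + [[x, 0.0, 0.0] for x in (0.1, 0.3, 0.35, 1.0)]
                + [[1.0, 0.0, 0.0]] * 3)
resampled = resample_by_arc_length(demo, 5)
print(resampled)
```

Uniform arc-length spacing discards the demonstration's timing; if the velocity profile matters, keeping only the filtering step and storing timestamps separately is an alternative.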

The robotic platform integrates a Franka Emika Panda arm, Intel RealSense D435i camera, and ROS-based control system. A perception module estimates the 6D pose of target objects, while DHB encodes the trajectory in a space-invariant form. Execution is coordinated through behavior trees and ROS action nodes.
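Behavior trees sequence task steps and switch to alternatives when a step fails. A minimal plain-Python sketch of that control flow follows; it uses no ROS or behavior-tree library, and each leaf stands in for what would be a ROS action call in a real system, returning a fixed status instead of waiting for an action result. The node names (`estimate_pose`, `generate_dhb_trajectory`, `insert_cable`, `regrasp_and_retry`) are hypothetical.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Action:
    """Leaf node: a placeholder for sending a ROS action goal and reading its result."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self):
        return self.fn()


class Sequence:
    """Ticks children in order and stops at the first one that does not succeed."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order and stops at the first one that does not fail."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


log = []


def leaf(name, result):
    """Hypothetical leaf that records its name and returns a fixed status."""
    def fn():
        log.append(name)
        return result
    return Action(name, fn)


tree = Sequence(
    leaf("estimate_pose", Status.SUCCESS),
    leaf("generate_dhb_trajectory", Status.SUCCESS),
    Fallback(
        leaf("insert_cable", Status.FAILURE),
        leaf("regrasp_and_retry", Status.SUCCESS),
    ),
)
result = tree.tick()
print(result, log)
```

Here every tick restarts from the first child. Libraries such as BehaviorTree.CPP or py_trees offer both this reactive style and variants that resume from a child that is still running.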

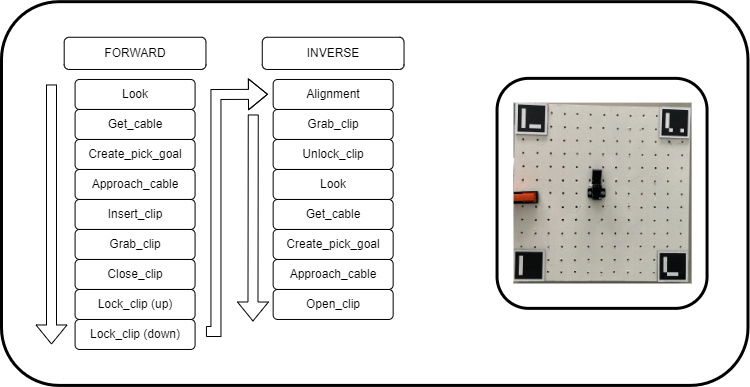

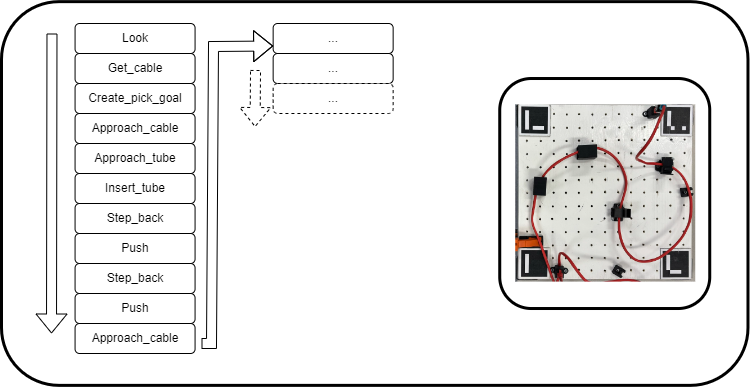

The system was evaluated in three experimental scenarios.

The results indicate that the system performs well in structured environments, and they highlight the challenges of manipulating flexible elements such as cables in unstructured settings.

Thanks to the invariance properties of DHB, a single demonstration can be reused across different spatial setups, so the task executes robustly when the layout changes.
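Reusing a demonstration in a new configuration is the decoding direction: the stored invariants are integrated again from a new start position and initial orientation. The sketch below assumes a simplified, position-only convention (per step: a length `m`, a bending angle `theta1` of the direction about the current bending axis, and a twist `theta2` of that axis about the new direction); the original DHB formulation also encodes orientation, and its frame conventions may differ. All names and the constant invariant values are hypothetical. The final check confirms that the two reconstructions are congruent copies placed at different poses.

```python
import numpy as np


def rotate(v, axis, angle):
    """Rotate vector v about a unit axis by angle (Rodrigues' rotation formula)."""
    return (v * np.cos(angle) + np.cross(axis, v) * np.sin(angle)
            + axis * np.dot(axis, v) * (1.0 - np.cos(angle)))


def reconstruct(m, theta1, theta2, p0, x0, b0):
    """Rebuild positions from DHB-style invariants and a chosen start pose.

    p0 : start position
    x0 : initial unit direction of motion
    b0 : initial unit bending axis, orthogonal to x0
    """
    p = [np.asarray(p0, dtype=float)]
    x = np.asarray(x0, dtype=float)
    b = np.asarray(b0, dtype=float)
    for k in range(len(m)):
        p.append(p[-1] + m[k] * x)        # step along the current direction
        if k < len(theta1):
            x = rotate(x, b, theta1[k])   # bend the direction about the axis
        if k < len(theta2):
            b = rotate(b, x, theta2[k])   # twist the axis about the new direction
    return np.array(p)


def pairwise_distances(P):
    return np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)


# Hypothetical invariants (constant values trace a helix-like path).
n = 40
m = np.full(n, 0.05)
theta1 = np.full(n - 1, 0.2)
theta2 = np.full(n - 2, 0.1)

# Same invariants, two different start poses (e.g. two board layouts).
copy_a = reconstruct(m, theta1, theta2, [0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0])
copy_b = reconstruct(m, theta1, theta2, [0.5, 0.2, 0.3], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0])
same_shape = np.allclose(pairwise_distances(copy_a), pairwise_distances(copy_b))
print(same_shape)
```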

Franka Emika Panda arm for precise motion execution and kinesthetic teaching.

Intel RealSense D435i depth camera + ArUco markers for 6D pose estimation.

ROS, Python, OpenCV, and custom DHB libraries for planning and control.

3D-printed boards and inserts designed for flexible, reconfigurable testing.

The thesis shows that invariant representations can support complex tasks such as cable insertion and modular assembly. DHB facilitates generalization across configurations; future work targets greater robustness when handling deformable objects and under occlusion.